Reputations in Crowdsourcing

About

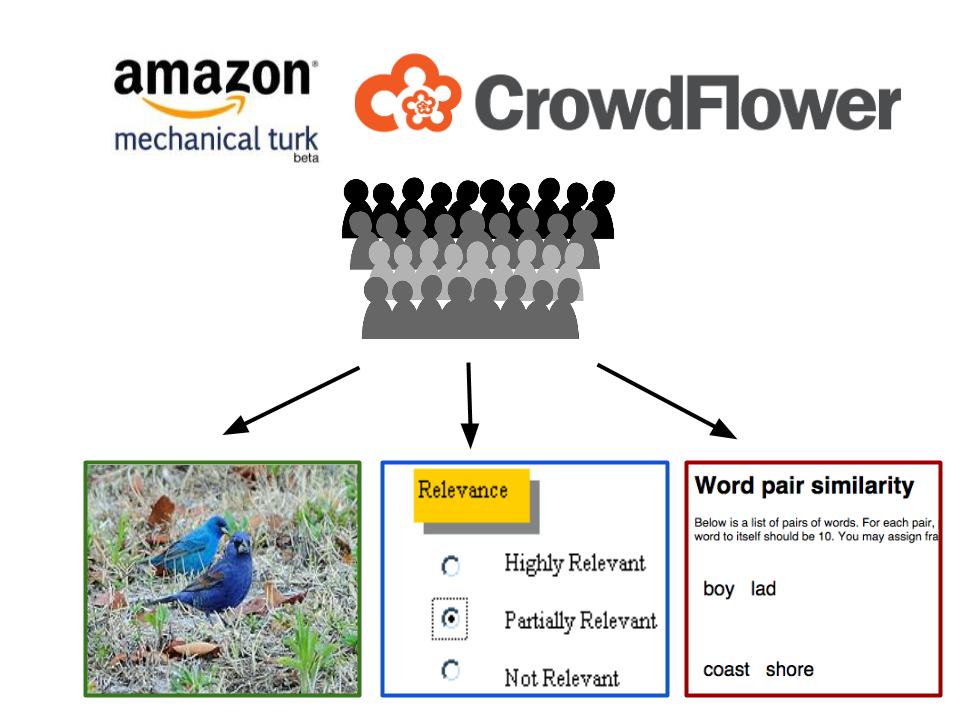

The growing popularity of online crowdsourcing services such as Amazon Mechanical Turk and CrowdFlower, and of citizen science projects such as FoldIt and Galaxy Zoo, has made it very easy to leverage the power of the masses to tackle complex tasks that cannot be solved by automated algorithms and systems alone. One of the most popular crowdsourced tasks is data annotation for machine learning applications, for example image labeling and speech transcription. The goal here is to aggregate the responses from the crowd to determine the underlying (unknown) ground truth of the data. However, these applications are vulnerable to noisy labels introduced either unintentionally by unreliable workers or intentionally by spammers and malicious workers. It is therefore important to identify the underlying "quality" of each worker so that we can determine which workers' labels to trust. This problem is challenging for the following reasons: (a) workers are often anonymous and transient, and may be difficult to track over time; (b) workers can have varying expertise, beliefs, interests and incentives; and (c) some tasks may be more difficult than others, so the notion of quality may need to be task-specific.

We propose to address this problem via a reputation system for crowd workers. Intuitively, a worker has a high reputation if she performs the tasks "honestly", as opposed to spamming or providing malicious labels. For instance, it has been shown that many workers complete tasks in a short period of time by providing uniform or random labels, just to earn the payment associated with completing the task. These workers have no incentive to do the tasks diligently and are motivated only by the payment. Further, on crowdsourced review sites such as Amazon and Yelp, businesses have incentives to hire people to post fake reviews that boost their own ratings and degrade the ratings of competitors. Both kinds of workers should be assigned a low reputation. Our goal is to design efficient algorithms for computing worker reputations in crowdsourcing systems.
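To make the idea of a worker reputation concrete, here is a minimal sketch of a naive baseline: aggregate labels by majority vote, then score each worker by how often she agrees with the majority. This is only an illustration and is not the algorithm from the publication listed below; the function names `majority_labels` and `agreement_reputation` and the input format are assumptions made for this example.

```python
from collections import Counter, defaultdict


def majority_labels(labels):
    """Aggregate by majority vote (hypothetical helper for this sketch).

    labels: dict mapping (worker, task) -> label.
    Returns a dict mapping task -> most frequent label.
    """
    votes = defaultdict(Counter)
    for (_worker, task), label in labels.items():
        votes[task][label] += 1
    # Ties are broken by the smallest label so the result is deterministic.
    return {
        task: min(counts, key=lambda lab: (-counts[lab], lab))
        for task, counts in votes.items()
    }


def agreement_reputation(labels):
    """Naive reputation: fraction of a worker's labels that match the majority."""
    consensus = majority_labels(labels)
    agree, total = Counter(), Counter()
    for (worker, task), label in labels.items():
        total[worker] += 1
        agree[worker] += int(label == consensus[task])
    return {worker: agree[worker] / total[worker] for worker in total}


# Toy data: two diligent workers and one worker who labels everything +1.
truth = {"t1": 1, "t2": -1, "t3": -1, "t4": 1}
labels = {}
for task, y in truth.items():
    labels[("alice", task)] = y
    labels[("bob", task)] = y
    labels[("uniform", task)] = 1

reputation = agreement_reputation(labels)
```

On this toy data the uniform labeler agrees with the majority on only half of the tasks, so she receives a lower score than the two diligent workers. A baseline like this breaks down when low-quality workers form the majority or collude, which is part of why dedicated reputation algorithms are needed.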

Publications

Reputation-based Worker Filtering in Crowdsourcing [pdf]

Srikanth Jagabathula, Lakshminarayanan Subramanian and Ashwin Venkataraman

Advances in Neural Information Processing Systems (NIPS) 27, 2014

People

Ashwin Venkataraman

Courant Institute of Mathematical Sciences, New York University

Srikanth Jagabathula

Stern School of Business, New York University

Lakshminarayanan Subramanian

Courant Institute of Mathematical Sciences, New York University

Center for Technology and Economic Development, NYUAD